If you are a programmer, you might have come into touch with SQL in one way or another at some point in time.

Especially if you're a Data Scientist / Analyst, you probably had contact with it, but then you probably decided to use much cooler and fancier ways to sort data using pandas or tidyverse.

However, with huge datasets being collected and churned out every day, as long as the data is in an SQL compliant database, SQL is still the quickest way to help you investigate, sort and aggregate in order to get a thorough understanding of your data.

By slicing and dicing with SQL, you will be able to identify patterns that are worth looking at further, which can often lead to a redefinition of the research population and to variables that are considerably smaller than the initial set.

Therefore, rather than converting large datasets to R or Python readable arrays/lists, the first step of analytics should be to use SQL to gain insights from your data.

1. Use WITH statements for better readability

Code readability is very critical. Readable code can be easily understood, without taking too much effort to understand what the programmer is trying to do.

I think that the readability of code is also partly determined by how much effort the coder is putting into their job. If they don't make a lot of effort to make it readable, then it will naturally be less readable.

Fairly complex queries containing two or more nested sub-questions make it difficult for other people to read, debug and understand your code.

But, fortunately, there is a WITH clause (this process is also called Subquery factoring).

WITH Statements are like a temporary result set holder, they allow you to give a sub-query block a name, which then can be referenced in several places within the main SQL query. WITH statements need to be followed by a SELECT, INSERT, UPDATE or DELETE statement.

This way you can use them to easily break down very complex queries to make them more readable.

See following example:

WITH

employee AS (SELECT * FROM Employees)

SELECT * FROM employee WHERE ID < 20

UNION ALL

SELECT * FROM employee WHERE Gender = 'F'

Or this example which is a bit more complex and where the WITH would actually make sense:

WITH totalPay(Airline, total) as

(SELECT Airline, sum(Pay)

FROM Pilot

GROUP BY Airline),

airlineAverage(avgPay) as

(SELECT avg(Pay)

FROM Pilot )

SELECT Airline

FROM totalPay, airlineAverage

WHERE totalPay.total > airlineAverage.avgPay;

I know that this is a fairly simple query example but I hope you should get the point.

By using the WITH clause, you can break down nested queries into individual temp views for better code readability.

Another useful scenario of the WITH clause is when the WITH query is referenced multiple times. Without repeatedly writing the same sub-query again and again (following the DRY principle), you can introduce data upfront and reuse it later.

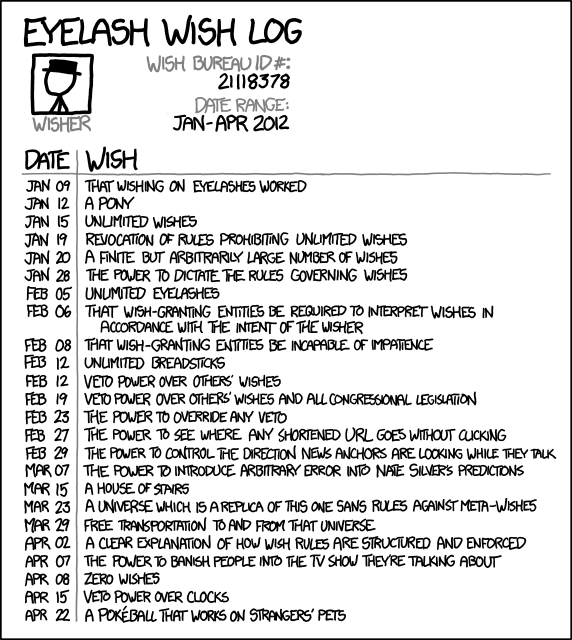

2. Don't Schedule Long Running Processes at Round Hours

You can experience a strange behavior on the stock market when a round number such as $25, $50, $100 is reached.

In the stock market, it has to do with newbie traders tending to buy or sell assets when the price is at a whole number and orders being placed at round numbers.

This article at investopedia.com explains it nicely:

[...] Most inexperienced traders tend to buy or sell assets when the price is at a whole number because they are more likely to feel that a stock is fairly valued at such levels. Most target prices or stop orders set by either retail investors or large investment banks are placed at round price levels rather than at prices such as $50.06. Because so many orders are placed at the same level, these round numbers tend to act as strong price barriers. [...]

Programmers have a similar habit of scheduling their programs to run at round hours (maybe all humans have the habit of sticking to round numbers? 🤓) - But, unfortunately, this behavior can cause huge resource-usage spikes.

That's why you should break this habit and try to schedule your processes at times like 5:06pm or 1:18am, for example.

An even better idea would be to schedule your tasks (if possible) at random times - that way, even if another task is scheduled to run at the same time, it won't cause any issues next time.

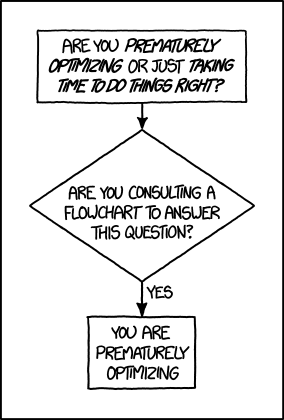

3. Avoid Using GROUP BY, ORDER BY, and DISTINCT

This one you probably already encountered - GROUP BY, ORDER BY, and DISTINCT are very slow, but why?

Sorting processes are very resource-intensive, since they need a fairly large amount of CPU time for one thing.

The main problem, however, is that the results need to be cached into a work table. After all, all lines must be read first before anything can be sorted and returning the final result. A sorting process can not be done using a pipeline. This can be especially a problem with large amounts of data.

That’s why you should avoid using GROUP BY, ORDER BY, and DISTINCT as much as possible.

But how can I avoid a group by (or similar) if I absolutely need to?

The simple answer is: You don’t.

It's like saying, "How do I stop using doors?" - That doesn't make any sense, either. Sure, if you don't want to enter or leave a building, you can just stop using the doors.

If you want to leave or enter a building, you can stop using doors by creating clunky workarounds such as, for example, using windows or chimneys to enter a house.

Same thing: if you absolutely need to use GROUP BY, just use it. That is why I also don’t have an example for this one 🤥.

4. Getting every Nth row.

This one sound quite complicated but is pretty easy if you use the modulo Operator.

The modulo operator (%) returns the remaining remainder of the division after one number is divided by another.

For example, 7 % 2 = 1, since 7 divided by 2 has a quotient of 3, 1 remains. Whereas 6 % 3 = 0, since dividing 6 by 3 has a quotient of 2, the remainder would be 0 - there is nothing to subtract from 6 after the calculating 2 * 3.

Doing this in SQL to get every second item would look something like this:

SELECT *

FROM items

WHERE id % 2 = 0

| id | price | cost |

| 2 | 700 | 670 |

| 4 | 200 | 100 |

| 6 | 900 | 320 |

| 8 | 800 | 720 |

| 10 | 440 | 430 |

Note that for this to work, the ID must be incremental, and no records have to ever been removed. To combat this, you could get a row number and use it instead of the id.

5. Use UNLOGGED Tables for Intermediate Data

When you modify data, the changes are written to the write ahead log or WAL (at least in most SQL flavors) to maintain data integrity.

Writing to the WAL is often needed, but there are some circumstances where you might be willing to give up some of its uses to make your querys faster.

One example would be temporary tables.

Temporary tables are disposable tables that store data temporarily (Who would have thought?). For example, it is very common to load data from a CSV file into a temporary table, then cleaning the data, and then placing it into a target table.

In this case, the temporary table is disposable and you don’t need a backup.

Know what you are really doing and its implications before using UNLOGGED.

See following code to create an UNLOGGED table:

CREATE UNLOGGED TABLE temp_table ( /* Table Definition */ );

6. Use BETWEEN instead of LIMIT

If you are a happy user of OracleDB or MsSQL, you probably use ROWNUM and top() for pagination. But in MySQL and many other flavours, pagination can be done using LIMIT, which is very slow for big tables and not so convenient as ROWNUM.

But luckily there exists a much better way of doing the same - BETWEEN.

BETWEEN is a logical operator that just returns true or false and thus can be used in the WHERE clause.

See following SQL Query for example:

SELECT *

FROM items

WHERE id BETWEEN 1 AND 5

Result:

| id | price | cost |

| 1 | 700 | 670 |

| 2 | 200 | 100 |

| 3 |

900 |

320 |

| 4 | 800 | 720 |

| 5 | 440 | 430 |

As I have already mentioned in No.4 - If your id is not incremental or might have gaps, you can get a row number and use it instead.

7. Remove OUTER JOINS

The INNER JOIN will eliminate rows while the OUTER JOIN will not. This leads to a lot more rows to handle and worse performance. ⠀

So just remove your OUTER JOINS! 🙃

This is easier said than done, and depends on whether you are allowed to change the contents of your tables. One solution is to eliminate OUTER JOINS by inserting placeholder rows in both tables. Say you have the following tables that need an OUTER JOIN to ensure that all data is returned:

Client Table:

| clientId | clientName |

| 1 | Makenzie Stein |

| 2 | Elyse Bird |

| 3 | Esmay Correa |

| 4 | Clinton Wallis |

Sales Person Table that uses Client Table:

| clientId | salesPerson |

| NULL | Newbee Smith |

| 2 | Ehsan Cano |

| 1 | Sherri Gallagher |

| NULL | Cairon Blake |

The simple solution is to add a placeholder row to the client table and update all NULL values to the placeholder key in the sales table.

New Client Table:

| clientId | clientName |

| 0 | NO CLIENT |

| 1 | Makenzie Stein |

| 2 | Elyse Bird |

| 3 | Esmay Correa |

| 4 | Clinton Wallis |

New Sales Person Table that uses Client Table:

| clientId | salesPerson |

| 0 | Newbee Smith |

| 2 | Ehsan Cano |

| 1 | Sherri Gallagher |

| 0 | Cairon Blake |

Not only can this eliminate the need for an OUTER JOIN, from now on other developers will not be forced to write statements such as ISNULL(clientId, "No client present").